I’ve tried many WordPress hosting companies over the years (Gandi, Dreamhost, SiteGround,…), and Cloudways is by far the best in terms of server performance and customer service.

You can reach a real, competent human being (not just a bot) through their chat widget, 24 hours a day, in less than 5 minutes, to help you solve any technical issue. Their online documentation is also very detailed. And you can easily monitor the live performance of your server, which brings us to the topic of this piece.

How to reduce high CPU Usage on your Cloudways server

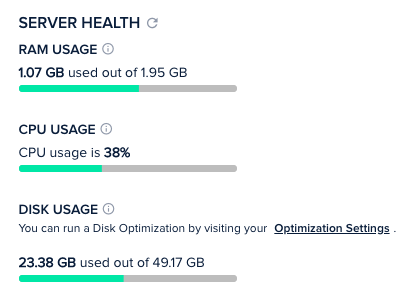

The only issue I ran into was an overload, lasting a few weeks, on one of my servers hosting a website with ~1,500 daily visits. CPU usage stayed close to 100%, triggering email warnings from Cloudways.

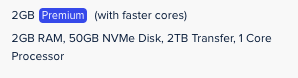

Here are the specs of this server: 2 GB RAM, 50 GB NVMe disk, 2 TB transfer, 1-core processor, hosted on DigitalOcean (EU), priced at $26 per month.

Since I host another website with roughly the same number of daily visits on a similar Cloudways server, I found it odd that the first server was frequently overloaded while the second one performed decently, always staying under 50% CPU load.

So I reached out to Cloudways customer service on a Saturday night, after 8 PM. They answered my query swiftly and we started investigating the issue together. I took a closer look at the server logs via the browser-based SSH terminal provided by Cloudways, and we noticed a flood of requests from a series of SEO bots (ahrefs, semrush, dataforseo,…).
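If you want to spot this kind of pattern yourself, counting requests per user agent in the Apache access log is a quick first check. The Python sketch below does that. It assumes the standard Apache "combined" log format (user agent in the last quoted field); the `top_user_agents` function and the sample lines are hypothetical, made up for illustration, and where the log file lives depends on your host.

```python
import re
import sys
from collections import Counter

# Apache "combined" log format (an assumption; check your server's LogFormat):
# host ident user [time] "request" status size "referer" "user-agent"
LOG_PATTERN = re.compile(
    r'^(?P<host>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] "(?P<request>[^"]*)" '
    r'(?P<status>\d{3}) (?P<size>\S+) "(?P<referer>[^"]*)" "(?P<agent>[^"]*)"'
)

# Hypothetical sample lines, for illustration only.
SAMPLE_LINES = [
    '203.0.113.5 - - [10/Feb/2024:20:15:01 +0000] "GET /blog/ HTTP/1.1" 200 5123 "-" '
    '"Mozilla/5.0 (compatible; AhrefsBot/7.0; +http://ahrefs.com/robot/)"',
    '203.0.113.5 - - [10/Feb/2024:20:15:02 +0000] "GET /page/2/ HTTP/1.1" 200 4870 "-" '
    '"Mozilla/5.0 (compatible; AhrefsBot/7.0; +http://ahrefs.com/robot/)"',
    '198.51.100.7 - - [10/Feb/2024:20:15:03 +0000] "GET /about/ HTTP/1.1" 200 3012 "-" '
    '"Mozilla/5.0 (compatible; SemrushBot/7~bl; +http://www.semrush.com/bot.html)"',
    '192.0.2.10 - - [10/Feb/2024:20:15:04 +0000] "GET / HTTP/1.1" 200 6020 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    "not a log line",
]


def top_user_agents(lines, n=10):
    """Return the n most frequent user agents as (agent, count) pairs."""
    counts = Counter()
    for line in lines:
        match = LOG_PATTERN.match(line)
        if match:  # lines that don't follow the combined format are skipped
            counts[match.group("agent")] += 1
    return counts.most_common(n)


if __name__ == "__main__":
    # Usage: python top_agents.py /path/to/access.log (placeholder path)
    if len(sys.argv) > 1:
        with open(sys.argv[1], encoding="utf-8", errors="replace") as log:
            lines = list(log)
    else:
        lines = SAMPLE_LINES
    for agent, count in top_user_agents(lines):
        print(f"{count:6d}  {agent}")
```

A handful of crawler user agents sitting at the top of this list, far ahead of regular browsers, is the kind of signal that pointed us to the SEO bots.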

Cloudways suggested adding a code snippet to my .htaccess file (which you can find at the root of your WordPress installation, under public_html, via an FTP client such as FileZilla).

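```apache
# Refuse requests from aggressive SEO crawlers, matched by user agent
RewriteEngine On
RewriteCond %{HTTP_USER_AGENT} ^.*(dataforseobot|AhrefsBot|SemrushBot|Proximic|PetalBot|dotbot|BLEXBot|MJ12bot).*$ [NC]
RewriteRule .* - [F,L]
```

These rules block the listed bots from fetching my pages: any request whose user agent contains one of these names (case-insensitively, because of the `[NC]` flag) is refused with a 403 Forbidden response (the `[F]` flag). In the Apache logs, their requests now show a 403 status code instead of a successful 200. Refused requests still cost the server a little, but nothing comparable to fully serving a page.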

Be careful not to block the crawlers of Google, Bing, Yandex or other search engines that matter to you.
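Before deploying the rule, it can be worth checking which user agents it would actually catch, so that a search engine crawler doesn't get blocked by accident. This Python sketch reuses the same pattern, with `re.IGNORECASE` standing in for the `[NC]` flag. Python's `re` module is not the PCRE engine Apache uses, but for a plain alternation like this one the results should be the same. The user-agent strings are illustrative examples; real strings vary with each bot's version.

```python
import re

# Same pattern as the RewriteCond line; re.IGNORECASE plays the role of [NC].
BLOCKED_BOTS = re.compile(
    r"^.*(dataforseobot|AhrefsBot|SemrushBot|Proximic|PetalBot|dotbot|BLEXBot|MJ12bot).*$",
    re.IGNORECASE,
)

# Illustrative user-agent strings mapped to whether we *want* them blocked.
USER_AGENTS = {
    "Mozilla/5.0 (compatible; AhrefsBot/7.0; +http://ahrefs.com/robot/)": True,
    "Mozilla/5.0 (compatible; SemrushBot/7~bl; +http://www.semrush.com/bot.html)": True,
    "Mozilla/5.0 (compatible; MJ12bot/v1.4.8; http://mj12bot.com/)": True,
    "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)": False,
    "Mozilla/5.0 (compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm)": False,
    "Mozilla/5.0 (compatible; YandexBot/3.0; +http://yandex.com/bots)": False,
}


def is_blocked(user_agent: str) -> bool:
    """Return True if the rule would answer this user agent with 403 Forbidden."""
    return BLOCKED_BOTS.search(user_agent) is not None


if __name__ == "__main__":
    for agent, wanted in USER_AGENTS.items():
        blocked = is_blocked(agent)
        status = "403 (blocked)" if blocked else "allowed"
        warning = "" if blocked == wanted else "  <-- unexpected!"
        print(f"{status:14} {agent}{warning}")
```

Because the rule matches substrings, a short name such as `dotbot` would also catch any longer user agent that happens to contain it, so running a few real strings from your own logs through a check like this is a cheap safeguard.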

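Important note: at first I inserted the code snippet at the end of my .htaccess file, which didn’t seem to deliver the expected result. Cloudways customer support advised moving the snippet to the top of the .htaccess file, and it worked perfectly. A likely reason is that the WordPress rules (between `# BEGIN WordPress` and `# END WordPress`) use `[L]` flags that hand requests over to index.php, so rules placed after that block are probably never reached for regular pages. My CPU usage is now back to acceptable levels, hovering around 50%. I may have to scale up the server once traffic grows significantly.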
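If you edit .htaccess with a script rather than by hand, a small helper can enforce the placement that worked here: a single copy of the bot-blocking rules, above everything else. This is a hypothetical Python sketch (`move_block_to_top`, `BOT_BLOCK` and `WP_DEFAULT` are illustrative names), and `WP_DEFAULT` is a simplified version of the block WordPress writes into .htaccess.

```python
# Hypothetical helper: keep the bot-blocking rules above everything else in
# .htaccess, in particular above the "# BEGIN WordPress" block.
BOT_BLOCK = (
    "RewriteEngine On\n"
    "RewriteCond %{HTTP_USER_AGENT} "
    "^.*(dataforseobot|AhrefsBot|SemrushBot|Proximic|PetalBot|dotbot|BLEXBot|MJ12bot).*$ [NC]\n"
    "RewriteRule .* - [F,L]\n"
)

# Simplified WordPress rewrite block, used here only as sample input.
WP_DEFAULT = r"""# BEGIN WordPress
<IfModule mod_rewrite.c>
RewriteEngine On
RewriteBase /
RewriteRule ^index\.php$ - [L]
RewriteCond %{REQUEST_FILENAME} !-f
RewriteCond %{REQUEST_FILENAME} !-d
RewriteRule . /index.php [L]
</IfModule>
# END WordPress
"""


def move_block_to_top(htaccess: str, block: str = BOT_BLOCK) -> str:
    """Return `htaccess` with exactly one copy of `block` at the very top.

    Only exact copies of `block` are removed from the rest of the file, so a
    variant with different whitespace would be left where it is.
    """
    rest = htaccess.replace(block, "")
    return block + "\n" + rest.lstrip("\n")


if __name__ == "__main__":
    # Snippet appended at the end of the file: the placement that did not work.
    before = WP_DEFAULT + "\n" + BOT_BLOCK
    print(move_block_to_top(before))
```

The function only transforms a string; to apply it to a real site you would read .htaccess, pass its contents through `move_block_to_top`, and write the result back, ideally after keeping a backup copy of the original file.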

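You can apply exactly the same technique with other hosting providers, as long as your site runs on a web server that reads .htaccess files (Apache, or LiteSpeed for instance).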

Subscribe to my weekly newsletter packed with tips & tricks around AI, SEO, coding and smart automations